‘Some half-baked conceptual thoughts about neuroscience’ alert

In the book Snow Crash, Neal Stephenson explores a future world that is being infected by a kind of language virus. Words and ideas have power beyond their basic physical form: they have the ability to cause people to do things. They can infect you, like a song that you just can’t get out of your head. They can make you transmit them to other people. And the book supposes a language so primal and powerful it can completely and totally take you over.

Obviously that is just fiction. But communication in the biological world is complicated! It is not only about transmitting information but also about convincing the receiver of something. Humans communicate by language and by gesture. Animals sing and hiss and hoot. Bacteria communicate by sending signaling molecules to each other. Often these signals are not just to let someone know something but also to persuade them to do something else. Buy my book, a person says; stay away from me, I’m dangerous, the rattlesnake says; come over here and help me scoop up some nutrients, a bacterium signals.

And each of these organisms is made up of smaller things also communicating with each other. Animals have brains made up of neurons and glia and other meat, and these cells talk to each other. Neurons send chemicals across synapses to signal that they have gotten some information, processed it, and, just so you know, here is what they computed. The signals they send aren’t always simple. They can be exciting to another neuron or inhibiting, a kind of integrating set of pluses and minuses for the other neuron to work on. But they can also be peptides and hormones that, in the right set of other neurons, will set new machinery to work, machinery that fundamentally changes how the neuron computes. In all of these scenarios, the neuron that receives the signal has some sort of receiving protein – a receptor – that is specialized to detect those signaling molecules.
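To make the "pluses and minuses" picture concrete, here is a toy sketch in Python. It is a cartoon, not a biophysical model: the function name `integrate`, the input values, and the firing threshold are all made up for illustration.

```python
def integrate(inputs, threshold=1.0):
    """Toy neuron: add up signed inputs and fire if the total reaches a threshold.

    Positive numbers stand in for excitatory inputs, negative numbers for
    inhibitory ones. The threshold value is an arbitrary assumption.
    """
    total = sum(inputs)
    return total >= threshold


# Two excitatory inputs alone are enough to push this toy neuron over threshold.
fires_without_inhibition = integrate([0.6, 0.7])

# Adding one inhibitory input pulls the total back under threshold.
fires_with_inhibition = integrate([0.6, 0.7, -0.5])

# A modulatory signal (the peptides and hormones above) could be cartooned as
# changing the rule itself, here by lowering the threshold.
fires_when_modulated = integrate([0.6, 0.7, -0.5], threshold=0.5)
```

The last call is the interesting one: the inputs are identical, but the receiving neuron computes something different because its machinery has changed.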

This being biology, it turns out that the story is even more complicated than we thought. Neurons are cells and, just like every other cell, they have internal machinery that uses mRNAs to provide instructions for building the protein machinery needed to operate. If you need more of one thing, the neuron will synthesize more of the mRNA and translate it into new proteins. Roughly, the more mRNA you have the more of that protein – tiny little machines that live inside the cell – you will produce.

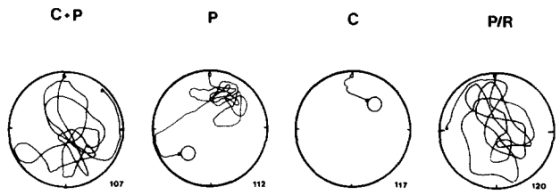

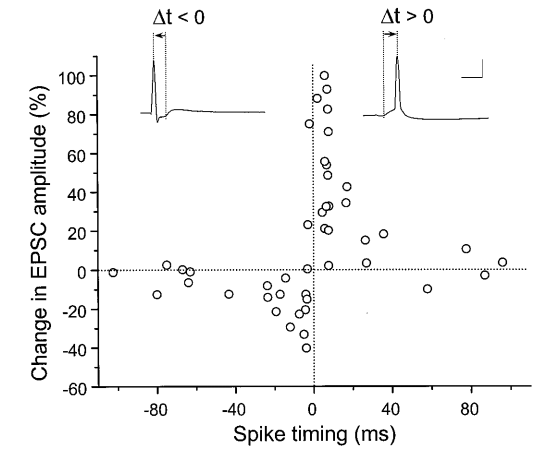

This synthesis and translation is behind much of how neurons learn. The saying goes that the neurons that fire together wire together, so that when they respond to things at the same time (such as being in one location at the same time you feel sad) they will tend to strengthen the link between them to create memories. And the physical manifestation of this is producing more of the protein for a specific receptor (say) so that now the same signal will activate more receptors and result in a stronger link.
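"Fire together, wire together" is usually written down as a Hebbian learning rule: the connection grows in proportion to the product of the two neurons' activity. Here is a minimal Python sketch of that idea. The name `hebbian_update`, the learning rate, and the activity values are assumptions chosen for illustration, and real synapses involve far more than one number.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1):
    """Basic Hebbian rule: strengthen the link when both neurons are active.

    `pre` and `post` are the activity of the sending and receiving neuron
    (1 = fired, 0 = silent). The learning rate is an arbitrary assumption.
    """
    return weight + learning_rate * pre * post


weight = 0.5  # starting strength of the link, chosen arbitrarily

# Four moments in time: the neurons fire together in the first and the last.
activity = [(1, 1), (1, 0), (0, 1), (1, 1)]

for pre, post in activity:
    weight = hebbian_update(weight, pre, post)
```

Only the two moments of joint firing change the weight. In the cartoon above, the bigger weight is standing in for the extra receptors the neuron has built.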

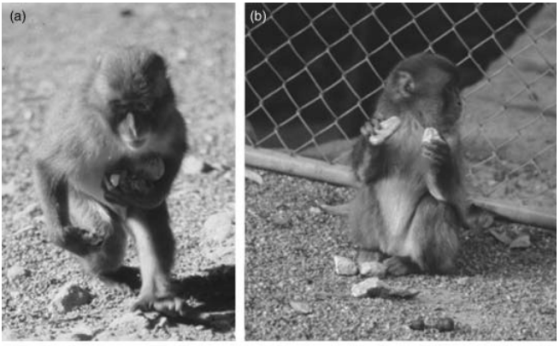

And that was pretty much the story so far. But it turns out that there is a new wrinkle to this story: neurons can directly ship mRNAs into each other in a virus-like fashion, avoiding the need for receptors altogether. There is a gene called Arc which is involved in many different pieces of the plasticity puzzle. Looking at the sequence of the gene, it turns out that there is a portion of the code that creates a virus-like structure that can encapsulate RNAs and burrow into other cells. This RNA is then released into the other cell. And this mechanism works. This Arc-mediated signaling actually causes strengthening of synapses.

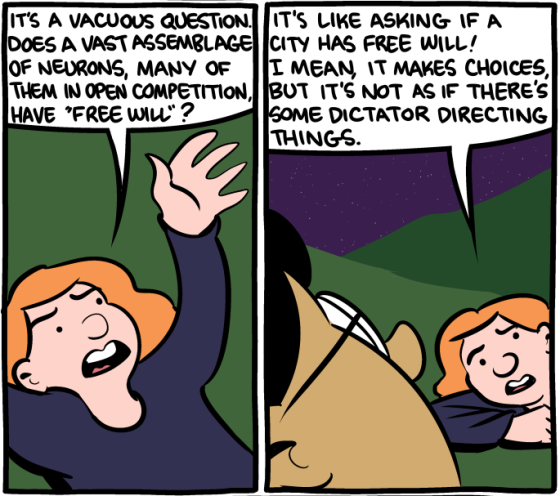

Who would have believed this? That the building blocks for little machines are being sent directly into another cell? If classic synaptic transmission is kind of like two cells talking, this is like just stuffing someone else’s face with food or drugs. This isn’t in the standard repertoire of how we think about communication; this is more like an intentional mind-virus.

There is this story in science about how the egg was traditionally perceived to be a passive receiver during fertilization. In reality, eggs are able to actively choose which sperm they accept – they have a choice!

The standard way to think about neurons treats them as somewhat passive. Yes, they can excite or inhibit the neurons they communicate with but, at the end of the day, they are passively relaying whatever information they contain. This is true not only in biological neurons but also in artificial neural networks. The neuron at the other end of the system is free to do whatever it wants with that information. Perhaps a reconceptualization is in order. Are neurons more active at persuasion than we had thought before? Not just a selfish gene but selfish information from selfish neurons? Each neuron, less interested in maintaining its own information than in maintaining – directly or homeostatically – properties of the whole network? Neurons do not simply passively transmit information: they attempt to actively guide it.