From Rajiv Sethi:

> As shown by Aumann, two individuals who are commonly known to be rational, and who share a common prior belief about the likelihood of an event, cannot agree to disagree no matter how different their private information might be. That is, they can disagree only if this disagreement is itself not common knowledge. But the willingness of two risk-averse parties to enter opposite sides of a bet requires them to agree to disagree, and hence trade between risk-averse individuals with common priors is impossible if they are commonly known to be rational.
>
> This may sound like an obscure and irrelevant result, since we see an enormous amount of trading in asset markets, but I find it immensely clarifying. It means that in thinking about trading we have to allow for either departures from (common knowledge of) rationality, or we have to drop the common prior hypothesis. And these two directions lead to different models of trading, with different and testable empirical predictions…
>
> In a paper that I have discussed previously on this blog, Kirilenko, Kyle, Samadi and Tuzun have used transaction level data from the S&P 500 E-Mini futures market to partition accounts into a small set of groups, thus mapping out an “ecosystem” in which different categories of traders “occupy quite distinct, albeit overlapping, positions.”
>
> One of our most striking findings is that 86% of traders, accounting for 52% of volume, never change the direction of their exposure even once. A further 25% of volume comes from 8% of traders who are strongly biased in one direction or the other. A handful of arbitrageurs account for another 14% of volume, leaving just 6% of accounts and 8% of volume associated with individuals who are unbiased in the sense that they are willing to take directional positions on either side of the market. This suggests to us that information finds its way into prices largely through the activities of traders who are biased in one direction or another, and differ with respect to their interpretations of public information rather than their differential access to private information…
>
> If there’s a message in all this, it is that markets aggregate not just information, but also fundamentally irreconcilable perspectives.
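Aumann's result quoted above has a constructive counterpart due to Geanakoplos and Polemarchakis ("We can't disagree forever", 1982): if two agents with a common prior take turns announcing their posterior for an event, and each announcement is common knowledge, the announcements must eventually coincide. The sketch below simulates that dialogue on a finite state space. It illustrates the agreement result rather than the no-trade corollary about bets, and the nine states, uniform prior, event, and information partitions are hypothetical choices made only for illustration.

```python
from fractions import Fraction


def posterior(cell, event, prior):
    """P(event | cell) under the common prior (dict: state -> probability)."""
    total = sum(prior[s] for s in cell)
    return sum(prior[s] for s in cell & event) / total


def refine(partition, signal):
    """Split every cell by the level sets of a public signal (state -> value)."""
    refined = set()
    for cell in partition:
        groups = {}
        for s in cell:
            groups.setdefault(signal[s], set()).add(s)
        refined.update(frozenset(g) for g in groups.values())
    return frozenset(refined)


def cell_of(partition, state):
    """The cell of `partition` that contains `state`."""
    return next(cell for cell in partition if state in cell)


def dialogue(partitions, event, prior, true_state, max_rounds=100):
    """Geanakoplos-Polemarchakis dialogue between two agents.

    Agents alternate announcing P(event | current information). Because the
    announcement rule is common knowledge, everyone can compute what the
    speaker would have said in every state, so both agents refine their
    partitions by the level sets of that function. Stops after two
    consecutive announcements that refine nobody's partition.
    """
    parts = [frozenset(frozenset(c) for c in p) for p in partitions]
    history, quiet = [], 0
    for t in range(max_rounds):
        speaker = parts[t % 2]
        signal = {s: posterior(cell, event, prior) for cell in speaker for s in cell}
        history.append(signal[true_state])  # what is actually said aloud
        new_parts = [refine(p, signal) for p in parts]
        quiet = quiet + 1 if new_parts == parts else 0
        parts = new_parts
        if quiet >= 2:
            break
    final = [posterior(cell_of(p, true_state), event, prior) for p in parts]
    return history, final


if __name__ == "__main__":
    prior = {s: Fraction(1, 9) for s in range(1, 10)}  # uniform common prior
    event = frozenset({3, 4})
    p1 = [{1, 2, 3}, {4, 5, 6}, {7, 8, 9}]  # agent 1's private information
    p2 = [{1, 2, 3, 4}, {5, 6, 7, 8}, {9}]  # agent 2's private information
    history, final = dialogue([p1, p2], event, prior, true_state=1)
    print([str(q) for q in history])  # ['1/3', '1/2', '1/3', '1/3', '1/3']
    print([str(q) for q in final])    # ['1/3', '1/3']
```

The agents start out disagreeing (1/3 versus 1/2). Agent 2's reply of 1/2 tells agent 1 that the state lies in {1, 2, 3, 4}, which splits agent 1's cell {4, 5, 6}; that refinement changes what agent 1's next announcement of 1/3 reveals (it rules out state 4), so agent 2's cell shrinks to {1, 2, 3} and the two posteriors coincide. The disagreement survives only as long as it is not common knowledge.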

I always find it shocking that economists find it reasonable to assume that traders have access to the same information or share the same priors. At the very least, beliefs are strongly path dependent: what you believe now is a function of what you believed ten minutes ago. And if we take reinforcement-learning models seriously, as I think we obviously should, an individual’s history of payoffs and costs, however random, will contribute to a heterogeneous distribution of beliefs.
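As a toy illustration of that last point, the sketch below gives every simulated trader the same prior and the same payoff distribution and lets each one learn from its own random payoff history. It assumes the simplest reinforcement-learning update, a delta rule (Rescorla-Wagner style) with a constant learning rate; the function names, the Gaussian payoffs, and all parameter values are hypothetical choices, not a model taken from the post.

```python
import random
import statistics


def learn_belief(rng, n_steps, true_mean, noise_sd, alpha, prior):
    """Delta-rule (Rescorla-Wagner style) estimate of an asset's mean payoff.

    Each step the agent experiences one noisy payoff and moves its belief a
    fraction `alpha` of the way toward it, so each belief carries its own
    history forward (path dependence).
    """
    belief = prior
    for _ in range(n_steps):
        payoff = rng.gauss(true_mean, noise_sd)  # this agent's own random draw
        belief += alpha * (payoff - belief)      # prediction-error update
    return belief


def simulate_population(n_agents=1000, n_steps=200, true_mean=0.0,
                        noise_sd=1.0, alpha=0.1, prior=0.0, seed=0):
    """Beliefs of agents who share a prior and an environment but not a history."""
    rng = random.Random(seed)
    return [learn_belief(rng, n_steps, true_mean, noise_sd, alpha, prior)
            for _ in range(n_agents)]


if __name__ == "__main__":
    beliefs = simulate_population()
    print(f"mean belief: {statistics.mean(beliefs):+.3f}")
    print(f"spread (sd): {statistics.stdev(beliefs):.3f}")
    # Stationary spread of this update is sqrt(alpha / (2 - alpha)) * noise_sd.
    print(f"theory:      {(0.1 / 1.9) ** 0.5:.3f}")
```

Even though nobody starts out different, the spread of beliefs settles near sqrt(alpha / (2 - alpha)) times the noise level (about 0.23 with these defaults) instead of shrinking to zero, because a constant learning rate keeps weighting recent, idiosyncratic payoffs. A learner that averaged all past payoffs equally (alpha = 1/t) would see the spread shrink over time, so the persistent heterogeneity here follows from that modeling assumption.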

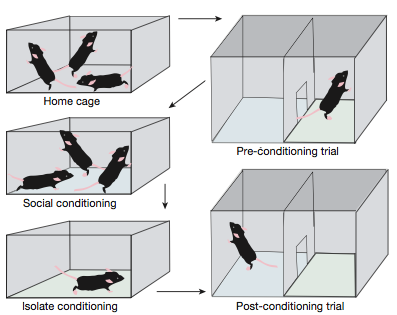

Of course, information sharing has long been studied in ecology. In fact, information transfer between individuals is something of a hot field right now: look at the literature on flocking and group dynamics for examples of researchers trying to work out what information is transferred between individuals, and how. Examples include a recent study and, more broadly, the work of Iain Couzin’s entire lab.

That said, this is a fantastic dataset for economics.